Introducing Our New Project: Below the Algorithm

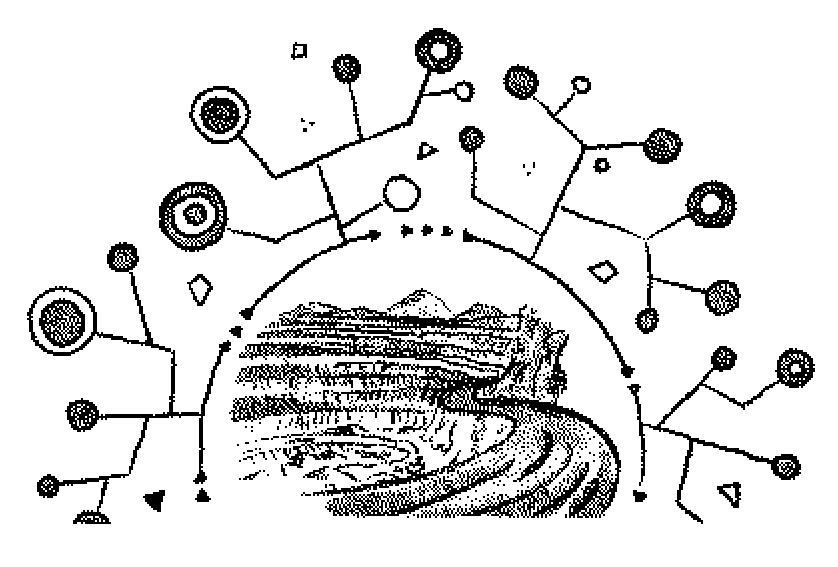

Behind every battery, every circuit, and every device are minerals like lithium, cobalt, nickel, and rare earth elements. Too often invisible, these materials come from specific places and leave lasting impacts on the people who live alongside them. A recent analysis notes that “the increasing demand for AI services is driving up extraction for more minerals, energy and water, a trend that is expected to escalate in the incoming years”. This project is about tracing the origins of the materials that power artificial intelligence to better understand its impacts. The idea is to map out the global mining sites tied to materials used to power AI, and then look deeper into who’s affected, who benefits, and how this all plays out environmentally and socially.

There’s a lot of talk about the promises of AI: how it’ll help us solve big problems, make life easier, even save the planet. But what often gets left out is the very real environmental and human cost behind the scenes. Most of us don’t think about the mines that make AI possible, or the communities living near them. And yet, these are the places where the AI story begins. That’s where planetary justice comes in. The planetary justice framework reminds us to ask who’s paying the price for innovation, who gets to decide how resources are used, and whether we are building a tech future that’s fair or just repeating old patterns of exploitation.

For instance, in Argentina’s Salar de Olaroz, producing one ton of lithium requires approximately 2 million liters of water, which drains fragile high-altitude ecosystems already struggling with scarcity. In the Democratic Republic of the Congo, mining for cobalt and copper has triggered forced evictions, assaults, and livelihood losses in local communities, often carried out with little regard for proper compensation or consultation.

From Ambika

Through my work in international development and the private sector, I have seen firsthand how extractive industries profoundly impact local communities, eroding health, livelihoods, and social cohesion. These burdens often fall unequally: for example, women are frequently excluded from decisions about land or compensation, yet they are hit hardest when mining harms water access or disrupts their ability to provide for their families. I see the global AI supply chain as a system shaped by longstanding inequalities, where a few powerful actors capture most of the benefits while communities at the source shoulder the costs. Mapping these extraction sites is a way to give visibility to the stories behind the minerals and to question the (increasingly nationalistic) narrative of AI progress. For me, this work is about making sure AI doesn’t simply repeat the injustices of the past, but instead has the potential to contribute to a more just and equitable future.

From Beatrice

As someone who has always encountered technology as a finished product, it was a real shift for me to think about the materiality of software: how everything that I perceive as ‘cloud’, as ‘wireless’, as ‘contactless’ and, most of all, as AI, is extremely material. Its materiality is just hidden from end users. In school, growing up, I was shown pictures of computers from the 1930s, big machines that would take up a whole room. Our computers are smaller, yes, but they run on data centres that take up whole buildings. The extraction of the material needed for this infrastructure is the first building block of the global economy, and it is essential to identify the power dynamics lying at its heart. Personally, this project is also a way for me to hold myself accountable for my role in the global economy, and for how I benefit from it, as a person born and living in the Global Minority and removed from the AI supply chain in my daily life.

Why “Below the Algorithm”?

Like our Rooted Clouds project, Below the Algorithm aims to expose AI’s material dependencies, showing how behind every AI model there is a tangible material world: lithium in batteries, cobalt in circuits, rare earth elements in processors, water used in mining, land reshaped for extraction, and labor powering it all. If we are to take AI seriously, we must trace the true costs of the raw materials that make it possible. We need to know where these minerals come from, how they are extracted, who benefits, and who bears the burdens. From there, we can work together, across communities, experts, and industries, to develop measures that are both consistent and sensitive to local realities, ensuring that the pursuit of AI does not come at the expense of people or the planet.

What We’re Doing

We’re building a public, living evidence platform that goes far beyond geolocation. Each extraction site is traced through the AI Supply Chain Impact Framework: for every site, we analyze who bears the burdens and who captures the benefits, and how land, water, labor, and more-than-human worlds are affected. This will also be an opportunity to pilot and test the framework, adjusting and expanding it as needed.

Alongside this broad, comparable dataset, we will select a few mining sites and conduct field research to develop in-depth case studies, in parallel with the higher-level information gathering for all sites. We will combine geospatial layers with impact indicators and community testimony, and then translate the findings into practical pathways (media dossiers, policy briefs, and community toolkits) so that documented harms can move into action.

Who This Is For

This work is for people who need reliable grounding to hold AI’s supply chains to account. Frontline and Indigenous communities can connect lived experience to specific supply chains and have it documented in ways that support negotiations or claims. Organizers and civil society groups can use comparable information to plan and coordinate work across regions. Journalists and investigators can follow materials and money from pit to product. Lawyers and advocates can assemble evidence for complaints, mediation, or litigation. Policymakers and regulators can see where harms cluster and where oversight is missing. Researchers can help ground-truth sites and track change over time. And companies and investors prepared to engage can identify their exposure and work with affected communities to address it.

Anticipated Challenges

We already know this won’t be easy or straightforward, and that it will require extensive collaboration across sectors and domains. Some of the things we expect to run into include:

Missing or vague data: A lot of mining happens in places where information isn’t publicly shared or is intentionally kept opaque.

Tracing responsibility: Of course, not all of any mine’s output is destined for AI development. We anticipate that working out how much of a mine’s output actually goes toward AI rather than other purposes will be a challenge. (We discuss this in our AI Supply Chain Impact Framework.)

Building trust: Reaching local communities and stakeholders takes time, care, and respect. We don’t want to rush or assume trust or access.

Staying current: The materials needed for AI change fast. For instance, as chipmakers work to reduce their reliance on rare earth elements due to supply risks or political tensions, demand may pivot toward other materials like gallium or silicon carbide.

Risk: In some regions, even documenting a site can be politically sensitive. We’ll need to make safety and ethics a priority. For instance, we can work closely with local partners to understand risks, ensure we are obtaining informed consent, and focus on avoiding actions that could endanger communities or researchers. That being said, it might still be challenging to obtain information in a safe and ethical manner.

This diary is one way of staying open about how our research evolves, and we welcome collaboration and advice from people who have experience mitigating these challenges.

What’s Next

We’ll keep updating this diary as things unfold: what we’re learning, what we’re rethinking, and how our methods evolve along the way. The goal is to be as transparent about the process as possible. Please reach out if you’re interested in this work; we’d love to hear from you and get your perspective. You can reach us at ambika@aiplanetaryjustice.com.

More soon!

Ambika and Beatrice